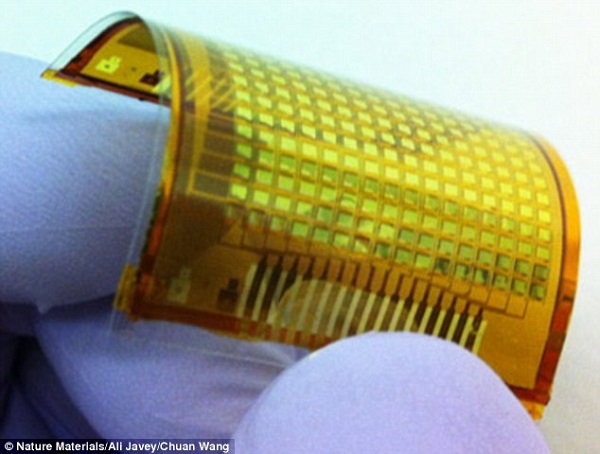

Scientists from the University of California, Berkeley have developed a type of e-skin that provides visual feedback when touched or pressed.

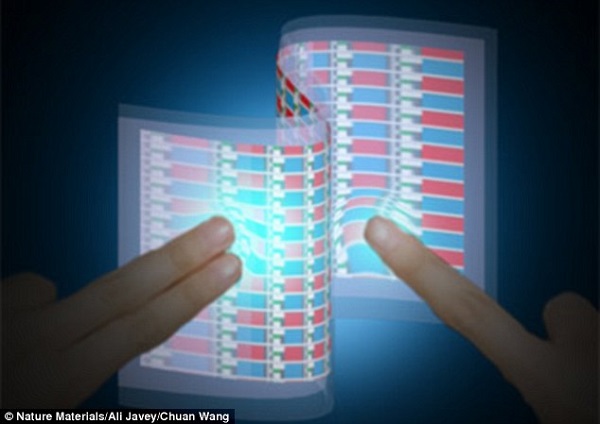

Despite being incredibly thin, the e-skin developed at UC Berkeley has OLEDs built in that give visual signals when it is touched. Basically, the electronic skin consists of a layer of polymer thinner than a sheet of paper, melted onto the top of a strip of silicon. Once the plastic hardens, electronic circuits are printed on the skin.

Some of the possible applications for this technology include smartwatches, smartphones, car dashboards and prosthetic limbs. On top of that, covering robots with electronic skin could help them actually feel when they touch something or are touched. Last but not least, such a film would make a great interactive wallpaper.

The pressure sensor is connected to the OLEDs, which light up when a force is felt. The harder the press, the brighter the light.
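For the programmers among us, the pressure-to-light relationship can be pictured with a few lines of Python. This is only a rough sketch under my own assumptions: the function name, the touch threshold, the pressure range and the straight-line mapping are all made up for illustration, and the real e-skin does this in its circuitry rather than in software.

```python
def oled_brightness(pressure_kpa: float,
                    threshold_kpa: float = 1.0,
                    max_kpa: float = 100.0) -> float:
    """Return a relative brightness between 0.0 and 1.0.

    All numbers here are hypothetical: the pixel stays dark below
    `threshold_kpa` and reaches full brightness at `max_kpa`.
    """
    if pressure_kpa <= threshold_kpa:
        return 0.0  # no touch felt, the OLED stays off
    clamped = min(pressure_kpa, max_kpa)  # cap at full brightness
    # Assumed linear ramp: more pressure, more light
    return (clamped - threshold_kpa) / (max_kpa - threshold_kpa)


if __name__ == "__main__":
    for pressure in (0.5, 10.0, 50.0, 150.0):
        print(f"{pressure:6.1f} kPa -> brightness {oled_brightness(pressure):.2f}")
```

The only point is that each pixel gets brighter as the press gets harder; the actual response curve of the device may look nothing like a straight line.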

Co-lead author Chuan Wang says that this technology could even make its way into the medical field: “I could also imagine an e-skin bandage applied to an arm as a health monitor that continuously checks blood pressure and pulse rates.”

Ali Javey, the professor of electrical engineering and computer sciences at the University of California, Berkeley who developed the e-skin, stated in an interview for MIT Technology Review: “We are not just making devices; we are building systems. With the interactive e-skin, we have demonstrated an elegant system on plastic that can be wrapped around different objects to enable a new form of human-machine interfacing.”

The concept of electronic skin is not entirely new, nor is Ali Javey the one who invented it. Three years ago, Massachusetts-based engineering firm MC10 developed flexible electronic circuits that attach to people’s skin via rubber stamps. In the following video from 2011 you can see Ali Javey demonstrating to MIT Technology Review how e-skin can be printed. Needless to say, in the two years that have passed since then the

I am really curious as to what the scientists have in store for us (or the robots) in the future. Fitting robots with devices that let them hear or see wasn’t that difficult. Now they’ll get the sense of touch. If anyone figures out how to make them taste and smell, plenty of people will become unemployed, leaving the Skynet scenario aside.

If you liked this post, check out this super-sleek futuristic mobile phone concept and the DARPA prosthetic arm capable of sensing touch.